Above: SBM-20

Above: another SBM-20 design? I originally thought this was an STS-5, but it's clearly labeled SBM-20.

This article shows how to characterize a GM tube as well as how to build a Geiger counter out of basic lab equipment.

I've been trying to get a neutron detector working to show my fusor works. Unfortunately, it's been the most time-consuming and frustrating part of the project. After overpowering and subsequently killing a $50 He-3 tube, it's time to practice on something similar but less expensive: the Geiger-Müller (GM) tube.

Russian military surplus, the $10 SBM-20 is the most common radiation tube on the surplus market (along with the similar STS-5). Despite their low cost, they are well built and provide crisp signals.

While I'll quote Wiki a few times in here, I've mostly been reading PANDA. PANDA focuses on neutron detectors, but a lot of it is based on conventional tubes. In fact, I think the Wiki page has a number of incorrect statements about energy resolution and such (or at least misleading ones, if it's a terminology issue). I'll write my congressman.

Theory

GM tubes are filled with low-pressure gas and operated at high voltage. They essentially act as a triggered spark gap: when a high-energy particle ionizes the gas, the tube momentarily conducts an electric current. This current can be averaged to determine the pulse rate, or individual events can be counted.

While Geiger counters operate in this spark gap mode, it's possible to operate the tube in other modes. In more detail, here's a theoretical GM plot from Wiki:

In the so-called "ion chamber region" no gas amplification occurs, so only a very small current (a weak signal) is produced. As the voltage is increased, the tube enters the "proportional region", where the output current depends on the energy deposited. That is, a 100 keV gamma ray induces less current than a 1 MeV gamma ray.

As voltage is increased further, amplification saturates to form the "Geiger region". Geiger counters operate here since this region produces strong, consistent signals that don't vary much with voltage. This makes units less sensitive to manufacturing tolerances and to aging that brings components out of spec. All particles now produce identical-looking pulses regardless of energy.

Even thin metal blocks alpha particles. Therefore, metal-walled GM tubes like the SBM-20 only detect beta particles and gamma rays.

SBM-20 specifications

The SBM-20 has the following key specs:

- Minimum Anode Resistor: 1.0 MΩ

- Recommended Anode Resistor: 5.1 MΩ

- Recommended Operating Voltage: 400 V

- Operating Voltage Range: 350 - 475 V

- Initial voltage: 260 - 320 V

- Plateau length: > 100 V

- Maximum Plateau Slope: 10 %/100 V

- Minimum Dead Time at 400 V: 190 us

- Life (pulses): > 2e10

For these tests I'm using a 4.7 - 4.8 MΩ anode resistor (what I had on hand). This is about 6 - 8% below the recommended 5.1 MΩ, but well above the 1.0 MΩ minimum.

Counting

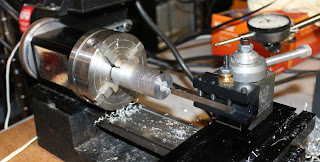

To keep things simple, I initially counted pulses instead of measuring current. Schematic:

Specifically I'm using:

- SBM-20 GM tube

- Ortec 456 HV power supply

- Agilent 54622D scope (floppy drive FTW)

- HP 53131A counter

- 10 uCi Cs-137 source (Spectrum Techniques, 2015)

Instead of placing the source directly against the tube, I placed it 1 inch away to make the experiment more repeatable. This minimizes the effect of small movements such as placement errors and vibration.
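Why does a 1 inch gap help? A rough way to see it is the inverse-square law. The sketch below assumes an idealized point source and a point-like detector (the SBM-20 is a long cylinder, so the real effect is smaller than this), and the 5 mm "nearly adjacent" distance is a hypothetical example rather than a measurement.

```python
def count_rate_ratio(distance_mm: float, shift_mm: float) -> float:
    """Relative count rate after moving a point source shift_mm farther away.

    Idealized inverse-square model: rate is proportional to 1 / distance**2.
    Ignores the tube's finite length, absorption, and scatter.
    """
    if distance_mm <= 0 or distance_mm + shift_mm <= 0:
        raise ValueError("distances must be positive")
    return (distance_mm / (distance_mm + shift_mm)) ** 2


if __name__ == "__main__":
    for d in (5.0, 25.4):  # hypothetical "nearly adjacent" vs. 1 inch (25.4 mm)
        change = 1.0 - count_rate_ratio(d, 1.0)
        print(f"{d:5.1f} mm: a 1 mm shift changes the rate by {change:.1%}")
```

Under these assumptions, a 1 mm slip changes the rate by roughly 31% at 5 mm but only about 7% at 1 inch.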

I started by characterizing the starting voltage. I found that the SNR approaches 1 at about 240 V:

Above: 240 V

Above: 250 V w/ ~10 mV signal

This is roughly consistent with the datasheet (Initial voltage: 260 - 320 V).

Now that I had a signal, I set up the counter threshold. I found that the noise floor is about 3 mV across all voltages. If I shielded the probes and such properly, it would likely go down.

Anyway, the counter threshold is set in 5 mV steps, so I set it to 5 mV (rising edge). Unfortunately, according to the scope, the counter doesn't trigger until 15.3 mV (i.e., it has a ~10 mV offset error). As a result, the first recorded count is at 270 V despite the scope seeing counts at 250 V.

Counts increased roughly linearly up to about 1200 V (note: I took fewer samples at higher voltages, which artificially makes that part of the graph look smoother).

After that pulses start ringing, drastically increasing CPM. More on that later.

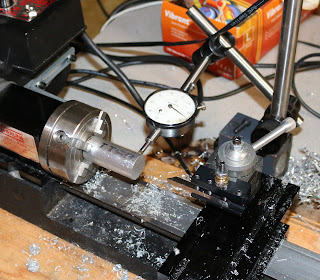

As I thought the GM tube was going to give out much earlier, I didn't beef up the resistors to account for the extra HV. At 2000 V the current limiting resistor failed (not too surprising), which then caused the sense resistor to fail (above). Resistor damage:

- 4.7 MΩ => 2.1 MΩ

- 4.7 kΩ => 486 kΩ

The 4.7 MΩ resistor was replaced with 4x 1.2 MΩ (4.8 MΩ), and the 4.7 kΩ resistor was replaced with a new unit.

Measuring current

A single particle imparts a varying amount of charge. However, when averaged over time we get an electrical current, which is easier to measure. Adding a capacitor in parallel with the sense resistor forms an RC filter that smooths out the current, making it easier to measure.

The setup is very similar to before, with the following tweaks:

- 4.7 MΩ resistor => 4.8 MΩ as 4x 1.2 MΩ

- 4.7 kΩ resistor replaced

- 4.7 uF capacitor

Note RC = 4.7e3 Ω * 4.7e-6 F ~= 22 ms, while at f ~= 3000 pulses/s the spacing between pulses is T ~= 0.3 ms. That is, the capacitor takes much longer to discharge than the interval between incoming pulses.
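To see why the capacitor smooths the signal, here is a small simulation of randomly timed (Poisson) pulses dumping charge into the parallel RC. It is a sketch under simplifying assumptions: every pulse deposits the same charge (the 1 nC value is a hypothetical placeholder, not a measured SBM-20 figure), the tube is treated as an ideal current source, and the 3000 pulses/s rate and 4.7 kΩ / 4.7 uF values come from the note above. The time-averaged voltage should settle near rate × charge × R.

```python
import math
import random


def simulate_sense_voltage(rate_hz, charge_c, r_ohm, c_farad,
                           duration_s=1.0, dt_s=1e-5, seed=0):
    """Voltage across a parallel RC driven by random, equal-charge pulses.

    Each time step the capacitor decays by exp(-dt/RC); with probability
    rate*dt a pulse adds charge_c / c_farad volts (assumes rate*dt << 1).
    """
    rng = random.Random(seed)
    decay = math.exp(-dt_s / (r_ohm * c_farad))
    p_pulse = rate_hz * dt_s
    v = 0.0
    samples = []
    for _ in range(round(duration_s / dt_s)):
        v *= decay
        if rng.random() < p_pulse:
            v += charge_c / c_farad
        samples.append(v)
    return samples


if __name__ == "__main__":
    R, C = 4.7e3, 4.7e-6      # sense resistor and filter capacitor
    rate, q = 3000.0, 1e-9    # pulses/s; q is a hypothetical charge per pulse
    v = simulate_sense_voltage(rate, q, R, C)
    tail = v[len(v) // 2:]    # skip the first 0.5 s (~20 RC) so the filter settles
    mean = sum(tail) / len(tail)
    ripple = math.sqrt(sum((x - mean) ** 2 for x in tail) / len(tail))
    print(f"RC = {R * C * 1e3:.1f} ms")
    print(f"mean = {mean * 1e3:.2f} mV (expected {rate * q * R * 1e3:.2f} mV)")
    print(f"ripple (std) = {ripple / mean:.1%} of mean")
```

For this kind of shot noise, Campbell's theorem puts the relative ripple at about 1/sqrt(2 × rate × RC), so a smaller capacitor (shorter RC) gives a noisier reading for the same count rate.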

I wired it up and placed the source nearly against the GM tube so that it provides a large signal. This is what I got:

Where:

- Red lines: min/max recommended operating voltages

- Green line: recommended operating voltage

This is basically the rightmost third of the Wiki image with an extra inflection.

The red line above is where continuous discharge begins. By 2000 V it looks like it was starting to saturate and may have leveled out if I kept going.

The green line marks the end of the plateau region. While the datasheet says the plateau is approximately 350 - 475 V, 600 - 1100 V looks better. In particular, the curve is pretty steep at 350 V and therefore not ideal to operate at. Even with the recommended current limiting resistor, I'm guessing that it would not have evened out yet. Possibly due to tube age?

Regardless, it demonstrates the importance of power supply stability: no matter the operating voltage, the current (count rate) depends on voltage. If you want stable readings, you must supply a stable voltage.

The GM region slope is about 3.5 counts/V. At 600 V I got 1758 counts, so 100 * (3.5 * 100) / 1758 ~= 20 %/100 V vs. the datasheet spec of 10 %/100 V. However, at 1000 V I got 3153 counts, so 100 * (3.5 * 100) / 3153 ~= 11 %/100 V, roughly in spec. Let's call it 15 %/100 V.
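The slope arithmetic above generalizes to a small helper: fit a line to (voltage, count) points on the plateau, then normalize the slope by the count at the voltage of interest. This mirrors the normalization used above; datasheets may define plateau slope against a different reference count, so treat it as a sketch. The three-point sweep in the test is hypothetical, not measured data.

```python
def fit_slope(volts, counts):
    """Ordinary least-squares slope (counts per volt) of counts vs. voltage."""
    if len(volts) != len(counts) or len(volts) < 2:
        raise ValueError("need at least two matching (voltage, count) points")
    n = len(volts)
    mean_v = sum(volts) / n
    mean_c = sum(counts) / n
    num = sum((v - mean_v) * (c - mean_c) for v, c in zip(volts, counts))
    den = sum((v - mean_v) ** 2 for v in volts)
    return num / den


def plateau_slope_pct_per_100v(slope_per_volt, count_at_voltage):
    """Express a slope as percent change in count per 100 V at one operating point."""
    return 100.0 * (slope_per_volt * 100.0) / count_at_voltage


if __name__ == "__main__":
    slope = 3.5  # counts/V read off the GM region of the sweep
    for volts, count in ((600, 1758), (1000, 3153)):
        pct = plateau_slope_pct_per_100v(slope, count)
        print(f"{volts} V: {pct:.0f} %/100 V")
```

With the full sweep data, `fit_slope(voltages, counts)` over just the plateau points would replace the eyeballed 3.5 counts/V.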

The area to the left of the green line is likely the proportional region. Given the relatively high noise in my setup, I was unable to observe the ion chamber region.

Continuous discharge

Wiki says "If the applied voltage is higher than the plateau's, a continuous glow discharge is formed and the tube cannot detect radiation." I was not able to get a continuous glow discharge to form (strong quenching agents?), but I was able to demonstrate that the tube can detect radiation far above the plateau region.

Above: unstable at 1200 V

At around 1200 V I started to see the pulses "ringing". This explains the increased counts at higher voltages observed earlier.

Above: unstable at 2000V

As the voltage is increased further, the rings appear to ring themselves and thus take much longer to stop.

Above: 2000 V w/ no source

Even without a source, a considerable number of pulses are produced. However, a valid count can be obtained by subtracting this out. I first set the counter threshold to 5.125 V (the maximum) to filter out most noise. With the ~3000 CPM Cs-137 source I got about 33765 CPM. When it was removed I got 30670 CPM, a difference of 3095 CPM. Additionally, when I added a strong Am-241 source, it shot up to 49879 CPM. Therefore, despite the tube being quite noisy at 2000 V, it's still usable.
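The background subtraction is easy to sketch with an uncertainty attached. This assumes each reading is a raw count accumulated over exactly one minute and that counts follow Poisson statistics; the ringing at 2000 V makes counts correlated, so the real uncertainty is likely larger than this estimate.

```python
import math


def net_rate(gross_counts, gross_minutes, bkg_counts, bkg_minutes):
    """Background-subtracted rate (CPM) and its 1-sigma Poisson uncertainty."""
    gross_rate = gross_counts / gross_minutes
    bkg_rate = bkg_counts / bkg_minutes
    # Var(N / t) = N / t**2 for a Poisson count N collected over time t
    sigma = math.sqrt(gross_counts / gross_minutes ** 2
                      + bkg_counts / bkg_minutes ** 2)
    return gross_rate - bkg_rate, sigma


if __name__ == "__main__":
    rate, sigma = net_rate(33765, 1.0, 30670, 1.0)
    print(f"net = {rate:.0f} +/- {sigma:.0f} CPM")
```

Under those assumptions the 3095 CPM difference is roughly 12 standard deviations above zero, which supports the "still usable" conclusion.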

Of course, operating in this range is still quite bad for tube life (rated > 2e10 pulses). Assume all events degrade the tube equally. At 2000 V I got 2486306 CPM ~= 2.5e6 CPM (mostly background). This yields 2e10 counts / 2.5e6 counts/min = 8000 min, or about 5.6 days of operation. While this won't immediately kill the tube, it's unnecessarily stressful.
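The lifetime estimate is just the rated pulse count divided by the pulse rate. A minimal sketch, assuming (as above) that every counted pulse, ringing included, uses up one pulse of the > 2e10 rating:

```python
MINUTES_PER_DAY = 60 * 24


def tube_life_days(rated_pulses, rate_cpm):
    """Days until the rated pulse count is used up at a constant count rate."""
    if rate_cpm <= 0:
        raise ValueError("rate must be positive")
    return rated_pulses / rate_cpm / MINUTES_PER_DAY


if __name__ == "__main__":
    for cpm in (3.0e3, 2.5e5, 2.5e6):
        print(f"{cpm:9.0f} CPM -> {tube_life_days(2e10, cpm):8.1f} days")
```

For comparison, a steady 3000 CPM (roughly the Cs-137 reading at 1 inch) would take about 4600 days, around 12.7 years, to reach the rating.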

Dead time

Dead time is the time after a pulse during which the tube can't register another pulse. I suppose I could have looked for the closest pair of pulses but, in general, this is difficult to measure. As a first-order approximation, let's consider dead time equivalent to pulse width.

Dead time is a function of voltage. I didn't measure it specifically, but here are some examples to illustrate.

Above: 250 V. Rise to peak: 10 us. Rise to decay inflection: 30 us

Above: 400 V. Rise to peak: 0.75 us. Rise to decay inflection: 1.75 us

Above: 1200 V. Rise to peak: 0.25 us. Rise to decay inflection: 0.75 us

As voltage increases, pulses get both taller and faster. Pulses rise to peak in 10 us at 250 V, 0.75 us at 400 V, and 0.25 us at 1200 V, with similar gross decay times. However, it always takes around 100 us for the signal to fully recover to its original DC value. This is possibly due to stray capacitance or the slower drift of the positive ions.

The main takeaway is that higher count rates are possible at higher tube voltages as long as you don't enter continuous discharge.
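Dead time also means a counter under-reads at high rates. A common first-order correction is the non-paralyzable model, n = m / (1 - m·τ), where m is the measured rate and τ the dead time. Whether the SBM-20 actually behaves non-paralyzably is an assumption here; the τ values below are the 190 us datasheet minimum and the ~100 us recovery seen on the scope.

```python
def true_rate_nonparalyzable(measured_cps, dead_time_s):
    """Estimate the true event rate from a measured rate (non-paralyzable model)."""
    lost_fraction = measured_cps * dead_time_s
    if lost_fraction >= 1.0:
        raise ValueError("measured rate is at or beyond the 1/dead_time limit")
    return measured_cps / (1.0 - lost_fraction)


if __name__ == "__main__":
    measured = 3000 / 60.0  # 3000 CPM expressed in counts per second
    for tau in (190e-6, 100e-6):
        n = true_rate_nonparalyzable(measured, tau)
        print(f"tau = {tau * 1e6:.0f} us: true ~ {n:.2f} cps "
              f"(+{n / measured - 1:.1%}), ceiling {1 / tau:.0f} cps")
```

At ~3000 CPM the correction is only about 1%, but it grows quickly as the measured rate approaches 1/τ (about 5300 cps for τ = 190 us), which is why a shorter dead time at higher voltage raises the usable count rate.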

Reversing

The tube is clearly marked with a "+" on one of the terminals. But theoretically it should collect charge on either terminal. So what happens if you reverse it?

At +400 V I recorded 3068 CPM. At -400 V I got 1381 CPM, about 45% of the + reading. When I removed the source altogether I got 9 CPM. So it's clearly working as a detector, albeit less efficiently.

I tried to take current measurements and by 700 V spikes were already occurring.

By 900 V the signal was unstable: the spikes no longer settled to a steady state. Maybe they were oscillating with the capacitor?

Overall, reversed operation appears to be less efficient and works over a much narrower range. Presumably GM tubes are optimized for positive voltage, so maybe it could be improved a lot if you intentionally designed a negative-center GM tube. In any case, the biggest takeaway is that under standard 400 V operation the tube works. This is actually unfortunate, as you might not notice you installed it backwards (it's mechanically possible).

Pulse height variation

I didn't actually try spectroscopy, but I did measure the minimum and maximum pulse heights. If these are related to the energy deposited, this should roughly indicate energy resolution capability.

I measured across the usable tube range: 250 V to 1100 V. As tube voltage increased, the height difference between the lowest and highest pulses also grew linearly. Once again, an actual spectrum would be required to see if this difference is actually useful.

Summary

Datasheet attributes claimed vs. what I measured:

- Recommended Operating Voltage: 400 V

  - Optimal: about 800 V

  - Recommended is not the same as optimal, but 400 V was on the edge of the plateau region

  - Probably enough for intended applications

- Operating Voltage Range: 350 - 475 V

  - Measured: 250 V - 1100 V

  - Lifetime concerns at higher voltages?

- Initial voltage: 260 - 320 V

  - Measured: signal first visible at about 240 - 250 V

- Plateau length: > 100 V

  - Measured: plateau looks best at roughly 600 - 1100 V

- Maximum Plateau Slope: 10 %/100 V

  - Measured: roughly 11 - 20 %/100 V (call it 15 %/100 V)

- Minimum Dead Time at 400 V: 190 us

  - Wouldn't maximum dead time be more useful?

  - 100 us measured?

Caveats:

- Old tube

- ~4.7 MΩ anode resistor instead of 5.1 MΩ

Future work

It's difficult to dispute the datasheet plateau figures since I didn't run with exactly the recommended resistor nor with a fresh tube. To this end, I'd like to re-run the current sweep with a fresh tube using a 5.1 MΩ resistor.

In any case, I'm going to characterize a larger tube and tweak the setup to observe the ion chamber region. If, instead of using a small sense resistor, I capacitively couple the signal off the HV side, I should get a much stronger signal. I also have a CAMAC high voltage power supply that should help me automate sweeps (poor / no documentation, though).

For new tests, first I'd like to create some SBM-20 gamma spectra. I'd also like to estimate efficiency. Finally, it might be interesting to saturate a tube in an x-ray machine and see if anything interesting happens.

Suggestions? Want to send me radiation stuff? Drop me a line

Until next time